A website audit is an integral part of managing a successful website. A thorough site audit helps you confirm that your SEO efforts are producing the desired results.

A site audit is a comprehensive analysis of all the elements that determine your website’s visibility in search engines. It enables you to identify the problems in your website that are leading to a lower conversion rate, higher bounce rate, and overall decrease in revenue generation.

To improve the profitability of your online business, you should conduct regular audits to fix issues, improve search engine rankings and attract more inbound traffic.

Audit strategy:

Auditing is a complex process that can help you improve numerous aspects of your website. Even though the primary aim of an SEO audit is to enhance a site’s search engine visibility, you need to determine the key factors that you want to analyze.

For example, you may want to conduct an audit to gain deeper insight into your competition, improve your website’s technical functioning or understand the effectiveness of keywords, among other things.

Therefore, you need to establish a feasible audit strategy based on the goals you want to achieve, and then implement it to improve the overall performance of your website.

For a well-optimized website, you need to perform the following analyses:

1. Technical

2. On-site

3. Off-site

4. Competitive

1. Technical analysis:

Technical analysis determines whether your website is performing properly or experiencing issues that can hurt user experience and your search rankings.

Numerous variables are evaluated as part of the technical analysis. They can be divided into two broad categories:

– Accessibility

– Indexability

Accessibility:

As the name suggests, accessibility refers to whether search engines and users can access your site. If your site is not accessible, you will quickly lose traffic and rankings. Evaluate the following items to make your website more accessible:

Robots.txt:

The robots.txt file is a plain-text file placed at the root of your website. It tells search engine crawlers which sections of your website they should not crawl, using Disallow rules that match URL paths.

However, sometimes the file inadvertently blocks parts of your website that you want the search engines to reach.

To ensure that robots.txt is not restricting any important sections of your website, review its rules manually and correct any that are too broad.
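
The manual review can be backed by a small script. The following is a minimal sketch using Python's standard `urllib.robotparser`; the rules in `ROBOTS_TXT` and the pages in `IMPORTANT_URLS` are hypothetical placeholders, not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, read it from your site's root.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog
"""

# Hypothetical pages that must stay crawlable.
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/products/widget",
    "https://example.com/blog/seo-audit-guide",
]


def blocked_urls(robots_txt, urls, user_agent="*"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# The overly broad "Disallow: /blog" rule blocks the blog post.
print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
```

Any URL this reports is a candidate for a narrower Disallow rule.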

Robots meta tags:

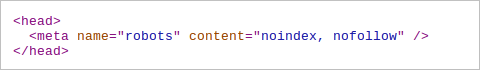

The robots meta tag indicates whether search engine bots are allowed to index a particular page. The noindex directive does not stop crawlers from accessing the page; it tells them not to include the page in their index.

When analyzing the accessibility of the website and its sections, an SEO audit checks for noindex tags on pages that should appear in search results.
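
As an illustration, a page's HTML can be scanned for a noindex directive with Python's standard `html.parser`. This is a sketch only: the helper name `has_noindex` is hypothetical, and it looks only at `<meta name="robots">` tags, not at HTTP headers or bot-specific meta tags.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, follow".
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def has_noindex(html):
    """Return True if the page carries a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))  # True
```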

XML Sitemap:

The XML Sitemap is another important factor that can affect the accessibility of your website. It works as a literal map that guides the crawlers to all the pages of your website.

Format the Sitemap properly, according to the Sitemap protocol, and submit it to Webmaster Tools. Make sure the Sitemap includes all the pages on your website and that new pages are included in both the Sitemap and the site architecture.
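
One way to check coverage is to compare the URLs listed in the Sitemap against the pages you know exist. The sketch below uses the standard `xml.etree.ElementTree` module; `SITEMAP_XML` and `SITE_PAGES` are hypothetical sample data, and it assumes a plain `urlset` file rather than a sitemap index.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemap protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content.
SITEMAP_XML = """\
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/about</loc></url>
</urlset>
"""

# Hypothetical list of pages found by a crawl or a CMS export.
SITE_PAGES = [
    "https://example.com/",
    "https://example.com/about",
    "https://example.com/new-page",
]


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> URLs listed in a urlset sitemap."""
    root = ET.fromstring(sitemap_xml.encode("utf-8"))
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def missing_from_sitemap(sitemap_xml, site_pages):
    """Return the known pages that the sitemap does not list."""
    return sorted(set(site_pages) - sitemap_urls(sitemap_xml))


print(missing_from_sitemap(SITEMAP_XML, SITE_PAGES))
```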

Website architecture:

A website is divided into different levels. Your site’s architecture outlines the overall structure of your website, including all the various levels.

To analyze the architecture of your website, determine the number of clicks it takes to travel from the homepage to the destination page. Make sure all the important pages have a high priority, i.e. they are reachable within a few clicks. This makes it easier for crawlers to reach them.
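
Click depth can be computed with a breadth-first search over the site's internal links. The following sketch assumes you already have a link graph from a crawl; the `LINKS` dictionary and the threshold of 3 clicks are illustrative assumptions, not values from a real site.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widget"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": ["/landing/offer"],
}


def click_depths(links, home="/"):
    """Return the minimum number of clicks from the homepage to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_deeper_than(links, max_clicks, home="/"):
    """Return pages that need more than max_clicks clicks to reach."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


print(pages_deeper_than(LINKS, max_clicks=3))
```

Pages that never appear in the result of `click_depths` are not reachable from the homepage at all, which is worth checking separately.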

HTTP status code:

If the URL of a page returns an error, users and search engine crawlers are unable to access it.

Conduct a site crawl to review and fix URLs that return an error. Broken URLs are those whose corresponding page no longer exists at that address because it was deleted or relocated. Redirect them to relevant pages using 301 HTTP redirects.
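
Once a crawl has produced a status code for each URL, the triage can be scripted. This is a minimal sketch over hypothetical crawl output (`CRAWL_RESULTS`) and a hypothetical mapping of old URLs to their replacements (`REPLACEMENTS`); it does not perform the crawl or configure the redirects itself.

```python
# Hypothetical crawl output: URL path -> HTTP status code.
CRAWL_RESULTS = {
    "/": 200,
    "/old-pricing": 404,
    "/blog/moved-post": 404,
    "/search": 500,
}

# Hypothetical decisions about where deleted or moved pages should point.
REPLACEMENTS = {"/old-pricing": "/pricing"}


def broken_urls(crawl_results):
    """Return URLs that answered with a client (4xx) or server (5xx) error."""
    return sorted(url for url, status in crawl_results.items() if status >= 400)


def redirect_plan(crawl_results, replacements):
    """Map each missing (404/410) URL to its 301 target, or None if undecided."""
    return {
        url: replacements.get(url)
        for url, status in sorted(crawl_results.items())
        if status in (404, 410)
    }


print(broken_urls(CRAWL_RESULTS))
print(redirect_plan(CRAWL_RESULTS, REPLACEMENTS))
```

Entries mapped to `None` still need a relevant destination before a 301 redirect can be set up; server errors such as 500 need a fix on the server rather than a redirect.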

Site performance:

The main aim of site performance optimization is to enhance user experience and to ensure the search engine spiders are able to crawl the entire site within the allocated time.

By one commonly cited estimate, 40% of users will abandon a website that takes more than 3 seconds to load. Moreover, sites that load quickly tend to be crawled more thoroughly and regularly than slower sites.

Mobile optimization:

More and more people are using mobile devices to access the internet and conduct online searches. According to a recent report, 60% of online searches are performed using mobile devices.

In addition, Google considers mobile optimization to be an important ranking factor.

Therefore, it is imperative to make your website mobile-friendly. Give mobile users an experience that is as good as, if not better than, the one they would have on a desktop.

You can use Google’s mobile-friendly check to analyze the mobile optimization of your site.

Indexability:

Indexability refers to how easily a web page or site can be indexed once the search engine crawlers have accessed it.

It is important to remember that only indexed pages or websites appear in search engine results. Therefore, it is critical to identify any elements that may hinder the indexation process.

Often, pages are not indexed due to the accessibility issues mentioned above or other minor problems.

However, it is also possible that Google has penalized your site. If this happens, you will notice that none of your pages show up in search results, or you will receive a notification in your webmaster tools account. To recover from a penalty, take the following steps:

1. Identify the reason: Before you can make amends, you need to identify the main reason behind the penalty. If you received a message in your webmaster tools account, you will already know the root cause of the penalty. However, if your site was penalized due to a search engine algorithm update, check SEO news sites to learn about recent changes in the algorithm.

2. Fix the site: This is easier said than done. You have to take a methodical approach to evaluate the reason for the penalty and fix it.

3. Request reconsideration: After fixing the problem, request reconsideration from Google. This is most effective if you were penalized for explicit reasons. Otherwise, if the penalty was due to an algorithm update, you will have to wait for the algorithm to refresh.

2. On-site analysis

Once you have ensured that both the search engine crawlers and users can access your website, you have to optimize the on-page factors that affect search rankings.

URLs:

URLs are required to access the content of a page. They also describe the contents of the page they point to. Therefore, URLs need to be easy to understand, short (ideally less than 115 characters) and user-friendly.

They should include keywords relevant to the content, describe the content specifically and use hyphens to separate words.
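
The length and hyphen guidelines above are easy to check automatically. The sketch below is one possible check, with a hypothetical helper name `url_issues`; it treats underscores and encoded spaces in the path as signs that hyphens were not used, which is a simplifying assumption.

```python
from urllib.parse import urlparse

MAX_URL_LENGTH = 115  # guideline from the text: ideally less than 115 characters


def url_issues(url):
    """Return a list of guideline violations found in the URL."""
    issues = []
    if len(url) >= MAX_URL_LENGTH:
        issues.append("too long")
    path = urlparse(url).path
    # Underscores or (encoded) spaces suggest words are not hyphen-separated.
    if "_" in path or "%20" in path or " " in path:
        issues.append("words not separated by hyphens")
    return issues


print(url_issues("https://example.com/blog/seo-audit-guide"))  # []
print(url_issues("https://example.com/blog/seo_audit_guide"))
```

Whether the URL contains relevant keywords and describes the content well still needs a human judgment.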

Content:

Content creation is a very effective SEO tool. However, for content to earn a better position in search engine results, it should:

– Be at least 300-500 words long

– Contain the main keyword in the first few paragraphs, without keyword stuffing

– Offer value to the readers; the bounce rate and the time spent on the page can help you gauge whether the content is engaging

– Not include grammatical or spelling mistakes

– Be related to the central idea of the website

– Be well-structured
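
The first two items on this checklist can be verified mechanically. This is a rough sketch: the function name, the 300-word minimum (the lower bound from the list) and the choice of two "opening" paragraphs are assumptions, and it says nothing about value, grammar or structure, which need a human reviewer.

```python
def content_issues(text, keyword, min_words=300, lead_paragraphs=2):
    """Check word count and whether the keyword appears in the opening paragraphs."""
    issues = []
    if len(text.split()) < min_words:
        issues.append("too short")
    # Paragraphs are assumed to be separated by blank lines.
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    lead = " ".join(paragraphs[:lead_paragraphs]).lower()
    if keyword.lower() not in lead:
        issues.append("keyword missing from opening paragraphs")
    return issues


print(content_issues("Short text.", "seo audit"))
```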

Keyword cannibalization:

Keyword cannibalization refers to a situation where multiple pages of your website, often unintentionally, target the same keyword. This can dilute the visibility of each of those pages, because search engines have to choose the page most relevant to the query from all the pages with the same keyword.

To rectify inadvertent keyword cannibalization, you can repurpose the content to target other keywords. You can also 301-redirect the cannibalizing pages to a single original, canonical page. Redirecting not only eliminates the problem, but also strengthens the link equity of the most relevant page for the query.
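
If you keep a record of the main keyword each page targets, competing pages can be found by grouping. A minimal sketch, assuming a hypothetical `PAGE_KEYWORDS` mapping maintained by hand or exported from an SEO tool:

```python
from collections import defaultdict

# Hypothetical mapping: page -> the main keyword it targets.
PAGE_KEYWORDS = {
    "/blog/seo-audit-guide": "SEO audit",
    "/services/audit": "seo audit",
    "/blog/link-building": "link building",
}


def cannibalized_keywords(page_keywords):
    """Return keywords targeted by more than one page, with the competing pages."""
    pages_by_keyword = defaultdict(list)
    for page, keyword in page_keywords.items():
        pages_by_keyword[keyword.strip().lower()].append(page)
    return {
        keyword: sorted(pages)
        for keyword, pages in pages_by_keyword.items()
        if len(pages) > 1
    }


print(cannibalized_keywords(PAGE_KEYWORDS))
```

Each group in the result is a candidate for repurposing or for a 301 redirect to the strongest page.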

Duplicate content:

Duplicate content refers to similar content hosted on multiple pages, both internal (on-site) and external (off-site). Even though duplicate content is usually not deliberate, it reportedly affects 29% of sites.

You can identify internal duplication by conducting a site crawl and grouping pages into clusters of duplicate content. For each cluster, you can designate the most relevant page as the original and link the other pages back to it. You can also use canonical URLs or noindex meta tags on the duplicate content.
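
Clustering internal duplicates can be sketched by hashing the normalized text of each crawled page. Note the limitation: this simple approach only catches pages whose text is identical after lowercasing and whitespace normalization; near-duplicates would need a similarity measure instead. The `PAGES` data is hypothetical.

```python
import hashlib
from collections import defaultdict

# Hypothetical crawl output: URL path -> extracted page text.
PAGES = {
    "/widget": "Our widget is the best   widget.",
    "/widget?ref=home": "Our widget is the best widget.",
    "/about": "We have been making widgets since 1999.",
}


def duplicate_clusters(pages):
    """Group URLs whose normalized text is identical."""
    clusters = defaultdict(list)
    for url, text in pages.items():
        normalized = " ".join(text.lower().split())
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        clusters[digest].append(url)
    return sorted(sorted(urls) for urls in clusters.values() if len(urls) > 1)


print(duplicate_clusters(PAGES))
```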

For off-site duplication, you can use tools like Copyscape.

Titles:

Titles are the key identifying features of a page that appear in search engine results and on social media posts. Therefore, they should be compelling and describe the contents of the respective page accurately.

Moreover, titles should be concise (less than 70 characters), contain the targeted keyword and be unique for each page of your site.
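
Length and uniqueness can be checked across a whole site in one pass. The sketch below assumes a hypothetical `TITLES` mapping of URL to title collected by a crawl; checking for the targeted keyword is left out because it depends on per-page keyword data.

```python
from collections import Counter

# Hypothetical crawl output: URL path -> page title.
TITLES = {
    "/": "Acme Widgets",
    "/about": "Acme Widgets",
    "/blog/guide": "The Complete, Definitive, Step-by-Step Guide to Auditing Every Page of Your Website",
}


def title_issues(titles, max_length=70):
    """Flag titles that are not shorter than max_length or that are reused."""
    counts = Counter(title.strip().lower() for title in titles.values())
    issues = {}
    for url, title in titles.items():
        problems = []
        if len(title) >= max_length:
            problems.append("too long")
        if counts[title.strip().lower()] > 1:
            problems.append("duplicate")
        if problems:
            issues[url] = problems
    return issues


print(title_issues(TITLES))
```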

Meta description:

The meta description is an HTML tag that summarizes the contents of a page and accompanies the title in search results. While meta descriptions do not affect search rankings directly, they play a pivotal role in click-through rates and conversion.

Effective meta descriptions are succinct, relevant and unique for each web page.

Images:

Pictures add substance to your content and make it more engaging. They offer a welcome reprieve from big blocks of text. However, images do not contribute to better search rankings unless they are optimized. Optimized images enhance the ranking of their host page and bring in more organic traffic.

Outlinks:

Linking is very useful for advancing your SEO efforts. When a page links to another, it vouches for the authority of the receiving page. Therefore, it is imperative to ensure you link only to high-quality sites.

Evaluate your outbound links to make sure that you do not link to spammy sites or pages that are irrelevant to your content. In addition, the anchor text of the link should contain the targeted keyword to make it most effective.

Finally, analyze your internal linking to make certain that the most important pages on your website receive the most internal links, making them easily discoverable to users.

3. Off-site analysis

Off-site ranking factors also play a defining role in securing an optimal ranking position. These refer to all the variables generated by external sources that affect the overall image of your website.

Trustworthiness

A site’s trustworthiness refers to its freedom from malware and spam. You should regularly analyze your site to protect it from malware that can compromise the user experience and expose users’ personal data to hackers.

To avoid spam, you should refrain from keyword stuffing, hiding text, or cloaking.

In addition, avoid black hat SEO practices. Google is improving its algorithms constantly and manipulating the system can lead to penalties.

The trustworthiness of the website also depends on its link profile, which includes links it acquires from other sites and links that direct to other sites (more on this later).

Popularity

The popularity of your website may not be one of the most influential factors in search rankings, but it definitely affects your inbound traffic.

Popular sites are those that enjoy the most attention and, thus, better conversion. You can gauge the popularity of your site by looking at its user traffic, comparing it with competing websites and analyzing the authority of its backlinks.

Backlink profile

Previously, we discussed outbound links and their effects on your website’s SEO. Similarly, the authority and trustworthiness of the sites you acquire links from are also very important. Therefore, you should make diligent efforts to maintain a clean link profile.

Consider the following backlink factors for an optimized link profile:

– Employ link diversity, i.e. a number of different websites should link to your website instead of multiple links coming from a single website

– Determine the authenticity of the linking domains; make sure you get links from different domains as opposed to numerous links from a single domain

– Vary the anchor text; identical exact-match anchor texts appear unnatural and inauthentic

– Inspect the quality of sites linking to your website, and disavow faulty links or links from low-quality sites

Use the numerous tools available to find backlinks, such as your webmaster tools account, Open Site Explorer and Majestic SEO, among others.
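
Once a backlink list has been exported from one of these tools, link diversity and anchor-text variety can be summarized with a few lines of Python. This is an illustrative sketch: the `BACKLINKS` data and the summary fields are hypothetical, and no particular tool's export format is assumed.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical export: (linking page URL, anchor text) pairs.
BACKLINKS = [
    ("https://blog-a.example/post", "seo audit tool"),
    ("https://blog-a.example/other", "seo audit tool"),
    ("https://news-b.example/story", "Example Inc"),
    ("https://forum-c.example/thread", "seo audit tool"),
]


def backlink_summary(backlinks):
    """Summarize domain diversity and anchor-text concentration."""
    if not backlinks:
        return {"referring_domains": 0}
    domains = Counter(urlparse(source).netloc.lower() for source, _ in backlinks)
    anchors = Counter(anchor.strip().lower() for _, anchor in backlinks)
    top_anchor, top_anchor_count = anchors.most_common(1)[0]
    return {
        "referring_domains": len(domains),
        # Share of all links that come from the single most frequent domain.
        "top_domain_share": max(domains.values()) / len(backlinks),
        "top_anchor": top_anchor,
        # Share of all links that use the single most frequent anchor text.
        "top_anchor_share": top_anchor_count / len(backlinks),
    }


print(backlink_summary(BACKLINKS))
```

A high `top_domain_share` points to low link diversity, and a high `top_anchor_share` points to anchor text that may look unnatural; what counts as "high" is a judgment call for the auditor.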

Social media engagement

The success of your website is also dependent on the number of social mentions and interactions it can garner. The more likes, re-tweets, +1, and shares it earns, the more relevant it is considered.

Furthermore, you should also analyze the authenticity of the individuals sharing your website’s content. Social engagements and mentions from influential figures are akin to a seal of approval that enhances the reputation of your website.

4. Competitive analysis

Competitive analysis is the final piece of the puzzle: it examines your competitors and evaluates how your website compares to them.

Understanding the strengths and weaknesses of your competition can facilitate your website optimization. You can learn from their strengths to win over their users and exploit their weaknesses to divert more users to your site.

A website audit is a lengthy and extensive process, but it offers valuable insights into the internal functioning of your website. It also yields actionable tips on how you can improve your site to enhance your search engine rankings and overall user experience.